Let’s discuss why every website owner should use Google Search Console when working on search engine optimization (SEO). Before starting, think about what Google Search Console is and why we need it.

Is Google Search Console necessary? If it is, then why? And what happens if we don’t use it? Think about it, then answer.

Google Search Console

It was formerly known as Google Webmaster Tools. It is a free service offered by Google that helps you monitor and maintain your website’s presence and performance in Google Search results.

Here you can analyze your website’s daily performance. Google Search Console gives you data such as the queries users search for, how often your pages appear in search results, how often users click through to your website, and your average position.

It has tools that let webmasters do the following:

- Submit and check a sitemap.

- Check and fix crawling issues, and see statistics about when Googlebot accesses a particular website.

- Write and check a robots.txt file to detect pages that are accidentally blocked in robots.txt.

- List internal and external pages that link to the site.

- Get a list of links that Googlebot had trouble crawling, including the error that Googlebot received when accessing the URLs in question.

- Set a preferred domain that decides how the website URL is shown in SERPs.

- Highlight elements of structured data to Google Search, which are used to enrich search result entries.

Now, let’s discuss the 10 reasons every website owner should use it.

HTML improvement

The HTML improvement report covers meta descriptions, title tags, and indexable content that the webmaster should address. It can pinpoint some common issues with metadata and indexable content, including:

- Duplicate meta descriptions: the same description is used on more than one page

- Long meta descriptions: the meta description is too long

- Short meta descriptions: the meta description is too short

- Duplicate title tags: the same title is used on more than one page

- Long title tags: the title is too long

- Short title tags: the title is too short

CODE

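Below is the code of a basic HTML page. The head section holds the title and meta tags, and the body section holds everything that is displayed, meaning the content that appears on your mobile or laptop screen.

<!DOCTYPE html>
<html>
<head>
<!-- The title and meta description are what search results usually show -->
<title>Title</title>
<meta name="description" content="A short summary of the page">
</head>
<body>
<!-- Everything inside the body is displayed to the visitor -->
<h1>Headings</h1>
<p>Paragraph</p>
</body>
</html>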

HTML improvement report

The HTML improvement report tells you whether your website’s title and meta description tags are too long, too short, or non-informative. It also shows you pages that do not have a title or meta description.

The report is valuable because fixing the issues it lists improves the website’s user experience, which is very important for ranking, traffic, and revenue potential.
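To make these checks concrete, here is a minimal Python sketch that flags a missing, short, or long title and meta description in a single page. It is not how Google's report works internally. The character limits in `TITLE_RANGE` and `DESCRIPTION_RANGE` are assumptions chosen for illustration, since Google does not publish fixed limits, and the helper names are hypothetical.

```python
from html.parser import HTMLParser

# Assumed limits for illustration only; Google does not publish fixed values.
TITLE_RANGE = (30, 60)
DESCRIPTION_RANGE = (70, 160)


class HeadTagParser(HTMLParser):
    """Collects the <title> text and the meta description of one page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def length_issue(text, limits, label):
    """Return an issue string for a missing, short, or long value, else None."""
    low, high = limits
    if not text or not text.strip():
        return f"missing {label}"
    size = len(text.strip())
    if size < low:
        return f"short {label}"
    if size > high:
        return f"long {label}"
    return None


def check_page(html):
    """List the title and meta description issues found in one HTML page."""
    parser = HeadTagParser()
    parser.feed(html)
    issues = [
        length_issue(parser.title, TITLE_RANGE, "title"),
        length_issue(parser.description, DESCRIPTION_RANGE, "meta description"),
    ]
    return [issue for issue in issues if issue]


page = "<html><head><title>Title</title></head><body><p>Paragraph</p></body></html>"
print(check_page(page))
```

Running it on the basic page above reports a short title and a missing meta description. Finding duplicates would mean comparing these values across all pages of a site, which this sketch does not attempt.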

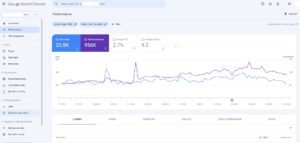

Search Analytics and Insights

Search Console Insights helps you focus on creating content by giving you a quick overview of how your content performs. It is powered by data from both Google Search Console and Google Analytics.

How does it help?

Search Console Insights can help website owners, bloggers, content creators, and others better understand their content’s performance. These are some of the questions it can answer:

- How are your new pieces of content performing?

- What do people search for on Google before they visit your content?

- How do people find your content on the Internet?
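Questions like these usually come down to clicks and impressions per search query. As a rough sketch, the Python below ranks queries by clicks and computes the click-through rate (clicks divided by impressions) for each. The rows are made-up sample data in an assumed (query, clicks, impressions) shape, and the function names are hypothetical.

```python
def click_through_rate(clicks, impressions):
    """CTR as a fraction: clicks divided by impressions (0.0 if never shown)."""
    return clicks / impressions if impressions else 0.0


def rank_queries(rows):
    """Sort (query, clicks, impressions) rows by clicks and attach each CTR."""
    ranked = sorted(rows, key=lambda row: row[1], reverse=True)
    return [
        (query, clicks, round(click_through_rate(clicks, impressions), 3))
        for query, clicks, impressions in ranked
    ]


# Hypothetical sample data, not real Search Console output.
sample_rows = [
    ("submit sitemap", 45, 300),
    ("google search console", 120, 2400),
    ("robots.txt tester", 0, 80),
]

for query, clicks, ctr in rank_queries(sample_rows):
    print(f"{query}: {clicks} clicks, CTR {ctr:.1%}")
```

A query with many impressions but a low CTR is often a hint that the title or meta description of the ranking page could be improved.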

How can you access Search Console Insights? There are a few ways:

- Choose Search Console Insights at the top of the overview page in Google Search Console.

- Use the direct link, and save it as a bookmark in Google Chrome or Firefox.

Who can use Search Console Insights?

It’s part of Google Search Console, so anyone with a verified Google Search Console property can use it. For the best results, you can also link your website’s Google Search Console property with its Google Analytics property to get more and better insights about your content.

Links to Your Site

Backlinks are among the most important factors if you want to rank in search engines. Your web pages tend to perform better in search engines when they have more backlinks from unique websites, so backlinks are a prime concern for search engine optimization.

There are two ways to find out who links to you. The first is Google Search Console (GSC), which is the best starting point for most people because it’s free. To see who links to your website, go to:

Search Console -> select property -> Links -> External links -> Top linking sites

This report shows up to the top 1,000 sites that link to your website.

Now let’s discuss the limitations of Google Search Console’s link data:

- All reports are restricted to the top 1,000 entries.

- No quality metrics.

No quality metrics means that when Google lists the top linking sites, it is not referring to the quality of those websites but simply to the number of times they link to your website.
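Counting links per site, with no notion of quality, can be sketched in a few lines of Python. This is only an illustration of the idea, not Google's implementation. The backlink URLs are hypothetical, and grouping by hostname with a leading "www." removed is a simplifying assumption.

```python
from collections import Counter
from urllib.parse import urlparse


def top_linking_sites(backlink_urls, limit=1000):
    """Count backlinks per linking site and return the most frequent ones."""
    sites = Counter()
    for url in backlink_urls:
        host = urlparse(url).hostname
        if host:
            # Treat www.example.org and example.org as the same site.
            sites[host.removeprefix("www.")] += 1
    return sites.most_common(limit)


# Hypothetical list of pages that link to your website.
backlinks = [
    "https://blog.example.com/post-1",
    "https://blog.example.com/post-2",
    "https://www.example.org/resources",
]

print(top_linking_sites(backlinks))
```

The result is ordered purely by link count, which is exactly why such a list says nothing about how valuable each linking site is.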

The second way to find your backlinks is Ahrefs Site Explorer.

Ahrefs Site Explorer

Ahrefs describes its index of live backlinks as the world’s most extensive. According to Ahrefs, it is updated with new information every 15 to 20 minutes as its robots crawl and recrawl the web. Enter any website into Ahrefs Site Explorer to see how many backlinks and referring domains it has.

Site Explorer -> enter a website, web page, or subfolder (whatever you want) -> choose mode -> Overview

To view every website linking to your chosen target, head to the Referring domains report.

Site Explorer -> Referring domains

Like Google Search Console, it displays the linking websites and the number of backlinks from each. It also displays additional SEO metrics such as:

- Domain Rating: the strength of the backlink profile of a website.

- Estimated organic traffic to each linking domain: how much organic traffic the linking website receives.

- Nofollow vs. dofollow links: how many of the backlinks from each website are nofollow and how many are dofollow.

- First seen: when the link was first found.
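The difference between nofollow and dofollow links is visible in the HTML itself: a nofollow link carries `nofollow` in the `rel` attribute of its `<a>` tag. The Python sketch below separates the two kinds of links on a page. It only looks at the `nofollow` value and ignores other `rel` values, which is a simplification, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class LinkRelParser(HTMLParser):
    """Splits the links of a page into followed and nofollow lists."""

    def __init__(self):
        super().__init__()
        self.followed = []
        self.nofollow = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        href = attrs.get("href")
        if not href:
            return
        # rel can hold several space-separated values, e.g. "nofollow noopener".
        rel_values = (attrs.get("rel") or "").lower().split()
        if "nofollow" in rel_values:
            self.nofollow.append(href)
        else:
            self.followed.append(href)


def classify_links(html):
    """Return (followed_links, nofollow_links) for one HTML document."""
    parser = LinkRelParser()
    parser.feed(html)
    return parser.followed, parser.nofollow


sample_html = (
    '<a href="https://a.example/">A</a>'
    '<a rel="nofollow noopener" href="https://b.example/">B</a>'
)
followed, nofollow = classify_links(sample_html)
print("followed:", followed)
print("nofollow:", nofollow)
```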

There are three powerful ways to use your link data to enhance your SEO and get more organic traffic:

- Keep the value of the links you already have: learn which links you have and make sure they keep working for your SEO.

- Replicate your competitors’ links: find out who links to your competitors and try to earn backlinks from the same websites.

- Show your content to likely linkers: put your content in front of the people who are most likely to link to it.

Manual actions

The best part of Google Search Console is that it reports issues to you. Google also explains how to read and act on the information found in the manual actions report.

Website owners can use the manual actions report in Google Search Console to improve their website’s performance. That’s necessary because if a site has a manual action, some or all of that website will not be displayed in Google search results.

What is manual action?

Google’s algorithms remove most spam automatically because they are very effective at detecting it. However, Google also reviews websites for cases where its policies and guidelines are not followed.

These cases are harder to detect algorithmically, so they need an actual person to investigate them manually. That’s where the term “manual action” comes from.

Here are a few offenses that result in a manual action penalty.

- Pure spam: This is black hat SEO and involves numerous techniques.

- Thin content with no added value: It happens when a website has low-quality or shallow pages that do not provide any value to visitors.

- Structured data issues: A penalty is applied when a website uses structured data that falls outside Google’s guidelines, so you must follow the guidelines to avoid penalties.

Site owners can also check the manual actions report to see whether they currently have any penalties against them. If they do, they have to resolve them.

Mobile Usability

Mobile data traffic is expected to keep growing in the coming years. So your website must be user-friendly to gain the right traffic, which means it must be responsive on mobile devices.

In simple terms, responsive means the website must work on tablets, desktops, laptops, mobile phones, and so on. If a website does not work on mobile devices, it is not a user-friendly website.

Here, user-friendly means the website has to work on multiple screen sizes, because today everyone uses the Internet on mobile phones, laptops, desktops, and other devices. Unlike in earlier days, few people go to a cyber cafe or sit down at a desktop to look up a website. Today, most browsing happens on mobile.

Why do we perform mobile website testing?

It helps you check the responsiveness of your website across multiple smartphone screen sizes, and it has other advantages too:

- To create websites that are easily accessible

- To create websites that are compatible with mobile devices

- To make websites that are easier to find

- To improve the speed and accuracy of the website

- To enhance the user experience of the website

- To enhance the website’s appearance in search engine results

How to perform mobile website testing?

- Use a responsive testing tool in the browser

- Use an iOS or Android emulator (software that lets one computer system behave like another)

- Use the browser’s developer tools

- Test on actual devices (live tests on multiple devices)

- Validate the HTML and CSS code
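One small part of validating the HTML can be automated. Responsive pages normally declare a viewport meta tag containing `width=device-width`, so a quick check for that tag catches an obvious problem early. The Python sketch below does only that. It is no substitute for testing on real devices, and the function name is hypothetical.

```python
from html.parser import HTMLParser


class ViewportParser(HTMLParser):
    """Remembers the content of the viewport meta tag, if the page has one."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "viewport":
            self.viewport = attrs.get("content") or ""


def has_responsive_viewport(html):
    """True if the page declares a viewport with width=device-width."""
    parser = ViewportParser()
    parser.feed(html)
    if parser.viewport is None:
        return False
    return "width=device-width" in parser.viewport.replace(" ", "").lower()


responsive_page = (
    '<head><meta name="viewport" '
    'content="width=device-width, initial-scale=1"></head>'
)
print(has_responsive_viewport(responsive_page))
print(has_responsive_viewport("<head><title>Title</title></head>"))
```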

Nowadays, mobile phones have become visitors’ first choice for exploring the Internet because they are easy and convenient. So it has become crucial for businesses to make their websites mobile-friendly.

That’s why clients today want a responsive website. To deliver one, you need to run mobile website testing on various mobile phones (developers can also test multiple screen sizes in Chrome by using Inspect).

Google can find and index your website

If Google doesn’t index your website, you are invisible: no one can find you through search. Following Google’s guidelines and getting your website indexed is therefore crucial.

What is crawling and indexing?

Google finds new web pages by crawling the web and adds those pages to its index, so that results can be returned quickly when a user searches for anything. The following are technical terms you should know:

- Crawling

- Web spider

- Indexing

- Googlebot

How to get indexed by Google

First, check whether your website appears on Google. If your web page isn’t indexed, follow these steps:

- Go to Google Search Console.

- Open the URL Inspection tool.

- Paste the URL you want to check into the search bar.

- Wait for Google to check the URL.

- If the page is not already indexed, press the Request Indexing button.

Google Search Console is a good option for handling all types of crawling errors. Search engines like Google use search bots to gather information about web pages. The process of collecting this information is called crawling.

Issues with meta tags or robots.txt

If your meta tags or robots.txt file have issues, they can usually be resolved quickly by checking the meta tags or the robots.txt file. That’s why we need to look at the following issues first. Google Search Console can detect them and help you handle them.

- URL error

- Outdated URL

- Pages with denied access

- Server errors

- Limited server capacity

- Web server misconfiguration

- Format errors: there is a fault in a file’s format

- The wrong page in the sitemap

- Issues with internal linking

- Wrong redirects: redirects for your page or site should be set up correctly

- Slow load speed

- Page duplicates caused by poor website architecture

- Wrong JavaScript and CSS usage
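Several of the issues above show up as HTTP status codes when a crawler requests a page. As a rough sketch, the Python below maps status codes to a few of these categories and counts them. The category labels and the mapping are assumptions made for illustration and are not the wording Google Search Console uses.

```python
from collections import Counter


def classify_crawl_status(status_code):
    """Map an HTTP status code to a rough crawl issue category."""
    if 200 <= status_code < 300:
        return "ok"
    if 300 <= status_code < 400:
        return "redirect (check the target)"
    if status_code in (401, 403):
        return "access denied"
    if status_code in (404, 410):
        return "URL error or outdated URL"
    if 400 <= status_code < 500:
        return "other client error"
    if 500 <= status_code < 600:
        return "server error"
    return "unknown"


def summarize(results):
    """Count issue categories for (url, status_code) pairs, skipping healthy pages."""
    labels = (classify_crawl_status(code) for _, code in results)
    return Counter(label for label in labels if label != "ok")


# Hypothetical crawl results for illustration.
crawl_results = [
    ("/", 200),
    ("/old-page", 404),
    ("/removed", 410),
    ("/members", 403),
    ("/checkout", 503),
]

for label, count in summarize(crawl_results).most_common():
    print(f"{label}: {count}")
```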

Robots.txt tester

The robots.txt tester checks that your robots.txt file is correct and free of errors. Robots.txt is a text file that is part of your website and gives search engine robots crawling instructions, so that your website is crawled correctly and its most important content gets priority.

Why is it important to check our robots.txt file?

Problems in your robots.txt file can damage your SEO. For example, a rule that accidentally blocks important pages keeps them from being crawled, so your website may not rank well in search engine results pages. Checking the file before your website is crawled means you can avoid these problems. That’s why it’s important to check your robots.txt file.
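You can run a similar check yourself with Python’s standard `urllib.robotparser` module. The sketch below lists which URLs a robots.txt file blocks for a given crawler. The robots.txt content and the URLs are made up for illustration, and Python’s parser may not match Googlebot’s rule handling in every detail, so treat the result as a first check only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the /blog/ rule blocks real content by mistake.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /blog/
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules block for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


important_pages = [
    "https://example.com/",
    "https://example.com/about",
    "https://example.com/blog/first-post",
]

print(blocked_urls(ROBOTS_TXT, important_pages))
```

Here the blog post shows up as blocked, which is the kind of accidental rule worth catching before a crawler visits the site.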

Sitemaps

Sitemap submission is important for website indexing

When we talk about a sitemap, the first questions that come to mind are: what is a sitemap, and what is its role in SEO? What happens if we don’t use one? Let’s talk about that.

In simple terms, a sitemap is a file that provides information about the pages, videos, images, and other files on your website, and describes the relationships between them. For example, Google reads your sitemap to crawl your website more efficiently.

Put another way, a sitemap lists all the web pages of your website that you would like search engines to know about and consider for ranking.

A new website usually has very few external links pointing to it, so crawlers may have a hard time locating all of its pages. A sitemap is therefore especially helpful for getting a new website crawled and indexed by search engines.

There are four kinds of sitemaps:

- Normal XML sitemap: acts as a road map of the different pages of a website

- Video sitemap: contains information about the videos on various pages

- News sitemap: contains information about the news articles on the website

- Image sitemap: contains information about the images on different pages

Why is it essential? Because search engines (like Google, Yahoo, and Bing) use your sitemap to find the different pages on your website.
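To show what a normal XML sitemap looks like, here is a minimal Python sketch that builds one with the standard library. It follows the sitemaps.org format with a `urlset` root and one `url` entry per page. The page URLs and dates are hypothetical, and real sitemaps are usually generated by your CMS or an SEO plugin.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build a sitemap XML string from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    xml_bytes = ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
    return xml_bytes.decode("utf-8")


# Hypothetical pages for illustration.
site_pages = [
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/first-post", "2024-02-01"),
]

print(build_sitemap(site_pages))
```

The resulting file is what you would upload to your site and then submit in the Sitemaps section of Google Search Console.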

Security Issues

Security issues and cyber attacks are a prime concern in today’s world.

Google regularly provides security updates. The security problems reported by Google Search Console fall into one of two classes: hacking or social engineering.

Hacking

In simple terms, hacking is unauthorized access to, or misuse of, your devices, computers, or website. For example, attackers may send you a link through email or messages, and clicking it can compromise your device or account.

There are three kinds of hacking:

- Injection of code

- Injection of content

- Injection of URL

Social engineering

Social engineering is a term for a wide range of malicious activities carried out through human interaction, or sometimes through malicious programs. It uses psychological manipulation to trick visitors into making security mistakes or giving away valuable and sensitive information.

Examples of social engineering

- Deceptive content

- Deceptive ads

- Pretexting

- Phishing

Some other types of security issues are as follows.

- Uncommon downloads

- Harmful downloads

- Harmful emails

- Unclear mobile billing

- Malware

- Email hacking

- Ransomware

- Brute Force attack

In today’s era, protecting data is essential for everyone. Everyone wants to keep their data safe from attackers.

There are many ways to address security issues:

- Use antivirus software to secure your system.

- Lock your devices and accounts; it is the simplest and one of the most crucial measures.

- Use firewalls to protect your network.

- Use a VPN (virtual private network) to protect yourself when you use public networks in places like coffee shops, hotels, and malls.

- Use strong and unique passwords: long, hard to guess, and different for every account.

- Use two-factor authentication, because it protects your account with two layers: your password and a second verification step.

- Update your system regularly.

- Secure your data with backups.

- Increase your security awareness.
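For the password advice in particular, a random password is much harder to guess than a word with a few digits added. As a minimal sketch, the Python below uses the standard `secrets` module to generate one. The default length of 16 and the minimum of 12 are assumptions made for illustration, and in practice a password manager does this for you.

```python
import secrets
import string


def generate_password(length=16):
    """Generate a random password from letters, digits, and punctuation."""
    if length < 12:
        # Assumed minimum for this sketch; shorter passwords are easier to guess.
        raise ValueError("use at least 12 characters")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    return "".join(secrets.choice(alphabet) for _ in range(length))


print(generate_password())
```

Generate a separate password for every account, so that one leaked password does not expose the others.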

If you’re looking for a professional and friendly team to help with your Google Search Console, don’t hesitate to get in touch for further information. If you need help from a specialist, you can contact us at askdigitalseo@gmail.com.